Meta Llama 2 vs. OpenAI GPT-4: A Comparative Analysis of an Open-Source vs. Proprietary LLM

Introduction In July 2023, Meta took a bold stance in the generative AI space by open-sourcing its...

Pre-trained Large Language Models (LLMs), such as OpenAI’s GPT-4 and Meta’s Llama 2, have grown in popularity and availability, playing a critical role in application development using generative AI. As a software developer, how can you quickly include LLM-powered capabilities in your application? A tech stack is emerging for interacting with these LLMs via in-context learning, often referred to as the emerging LLM app stack.

In this article, we’ll define in-context learning and explore each layer of this emerging tech stack, which serves as a reference architecture for AI startups and tech companies.

Recall that the ready-to-use LLMs, such as GPT-4 and Llama 2, are foundation models pre-trained on massive amounts of publicly available online data, including Common Crawl (an archive of billions of webpages), Wikipedia (a community-based online encyclopedia), and Project Gutenberg (a collection of public domain books). GPT-4's model size is reportedly estimated at ~1.76T parameters (OpenAI has not published a figure), while the largest Llama 2 variant has 70B parameters. Due to the breadth of the pre-training data, these LLMs are suitable for generalized use cases. However, the pre-trained LLMs will likely need some adjustment to fit your application's specific scenarios.

There are two general approaches for tailoring the pre-trained LLMs to your unique use cases: fine-tuning and in-context learning.

Fine-tuning involves additional training of a pre-trained LLM by providing it with a smaller, domain-specific, and proprietary dataset. This process will alter the parameters of the LLM, and thus modify the “model's knowledge bank” to be more specialized.

As of December 2023, fine-tuning for GPT-3.5 Turbo is available via OpenAI's official API. Meanwhile, fine-tuning of GPT-4 is offered via an experimental access program and “eligible users will be presented with an option to request access in the fine-tuning UI.”
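To make the "smaller, domain-specific dataset" concrete, here is a minimal sketch of preparing training examples in the chat-style JSONL layout that OpenAI's fine-tuning guide describes for GPT-3.5 Turbo (one JSON object with a `messages` list per line). The helper names, the system prompt, and the two sample conversations are assumptions made up for illustration; the sketch only formats data and does not call any API.

```python
import json

def to_finetune_record(system_prompt, user_message, assistant_reply):
    """Build one chat-format training example (hypothetical helper)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
            {"role": "assistant", "content": assistant_reply},
        ]
    }

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)

# Made-up sample data; a real dataset would hold many more examples.
SYSTEM_PROMPT = "You are a helpful and courteous customer service representative."
conversations = [
    ("Where is your headquarters located?",
     "Our company headquarters is located in Los Angeles, CA."),
    ("Can you check my order status?",
     "Yes, I can help with checking the status of your order."),
]

records = [to_finetune_record(SYSTEM_PROMPT, q, a) for q, a in conversations]
jsonl_text = to_jsonl(records)
print(jsonl_text)
```

The resulting text would be saved to a `.jsonl` file and uploaded through whichever fine-tuning workflow you use.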

Fine-tuning for Llama 2 can be performed via a machine learning platform, such as Google Colab, and free LLM libraries from Hugging Face. Here's an online tutorial written by DataCamp.

The advantages of fine-tuning:

Typically higher-quality outputs than prompting

Capacity for more training examples than prompting

Lower costs and lower-latency requests after the fine-tuning process due to shorter prompts

The disadvantages of fine-tuning:

Machine learning expertise is required, and the fine-tuning process is resource-intensive

Risk of losing the pre-trained model's previously learned skills, known as Catastrophic Forgetting, while gaining new skills

Challenge of overfitting, which occurs when a model fits exactly or too closely to its training dataset, hindering it from generalizing or predicting with new unseen inputs

In-context learning doesn’t change the underlying pre-trained model. It guides the LLM output via structured prompting and relevant retrieved data, providing the model with “the right information at the right time.”

To condition the LLM to perform a more specific task and to provide output in a particular format, the few-shot prompting technique can be used. In addition to the main query, examples of expected input and output pairs are passed to the LLM as part of the input context. Recall that an LLM’s context, composed of tokenized data, can be likened to the “attention span of the model.” The example pairs almost act like a targeted, mini training dataset.
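As a rough illustration of few-shot prompting, the sketch below assembles a plain-text prompt from an instruction, a handful of input/output pairs, and the main query. The `Input:`/`Output:` labels, the function name, and the ticket-labeling examples are assumptions chosen for this example, not a format any particular model requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Concatenate instruction, example pairs, and the new query into one prompt."""
    lines = [instruction, ""]
    for example in examples:
        lines.append(f"Input: {example['input']}")
        lines.append(f"Output: {example['output']}")
        lines.append("")
    # The prompt ends with an open "Output:" so the model completes the pattern.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

# Hypothetical examples that show the model the expected output format.
examples = [
    {"input": "My package never arrived.", "output": "category=shipping"},
    {"input": "I was charged twice.", "output": "category=billing"},
]

prompt = build_few_shot_prompt(
    "Label each customer message with a single category.",
    examples,
    "How do I return an item?",
)
print(prompt)
```

Because the examples travel inside the prompt, they count against the model's context length on every request.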

A compiled prompt typically combines various elements such as a hard-coded prompt template, few-shot examples, information from external APIs, and relevant documents retrieved from a vector database.

As the pre-trained LLMs were trained on publicly available online data and licensed datasets with a cutoff date, they are unaware of more recent events and private data. To provide an LLM with additional source knowledge, the retrieval augmented generation (RAG) technique can be used. Any extra required information outside of the LLM may be retrieved and passed along as part of the input context. Relevant data may come from multiple sources, such as vector or SQL databases, internal or external APIs, and document repositories.

As of December 2023, GPT-4 Turbo offers a maximum context length of 128,000 tokens, while Llama 2 supports a maximum context length of 4,096 tokens.
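The context limit decides how much retrieved material can ride along with a prompt. The sketch below greedily keeps retrieved documents until a token budget is used up. Two simplifications are assumptions: tokens are approximated by whitespace-separated words (a real tokenizer will count differently), and 512 tokens are arbitrarily reserved for the model's answer.

```python
GPT4_TURBO_MAX_TOKENS = 128_000  # from the figures quoted above
LLAMA2_MAX_TOKENS = 4_096

def estimate_tokens(text):
    """Crude estimate: one token per whitespace-separated word (assumption)."""
    return len(text.split())

def fit_to_context(prompt, documents, max_context_tokens, reserved_for_output=512):
    """Keep documents, in the given order, while they fit in the remaining budget."""
    budget = max_context_tokens - reserved_for_output - estimate_tokens(prompt)
    selected = []
    for document in documents:  # assumed sorted from most to least relevant
        cost = estimate_tokens(document)
        if cost > budget:
            break
        selected.append(document)
        budget -= cost
    return selected

# Synthetic documents of 3,000, 500, and 500 "tokens".
documents = [" ".join(["word"] * n) for n in (3000, 500, 500)]
question = "What is the refund policy?"

print(len(fit_to_context(question, documents, LLAMA2_MAX_TOKENS)))
print(len(fit_to_context(question, documents, GPT4_TURBO_MAX_TOKENS)))
```

With the smaller limit only part of the retrieved material fits, which is why retrieval quality and chunk size matter more for short-context models.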

The advantages of in-context learning:

No machine learning expertise is required, and it is less resource-intensive than fine-tuning

No risk of breaking the underlying pre-trained model

Separate management of specialized and proprietary data

The disadvantages of in-context learning:

Typically lower-quality outputs than fine-tuning

LLM's maximum context length constraint

Higher costs and higher-latency requests due to longer prompts

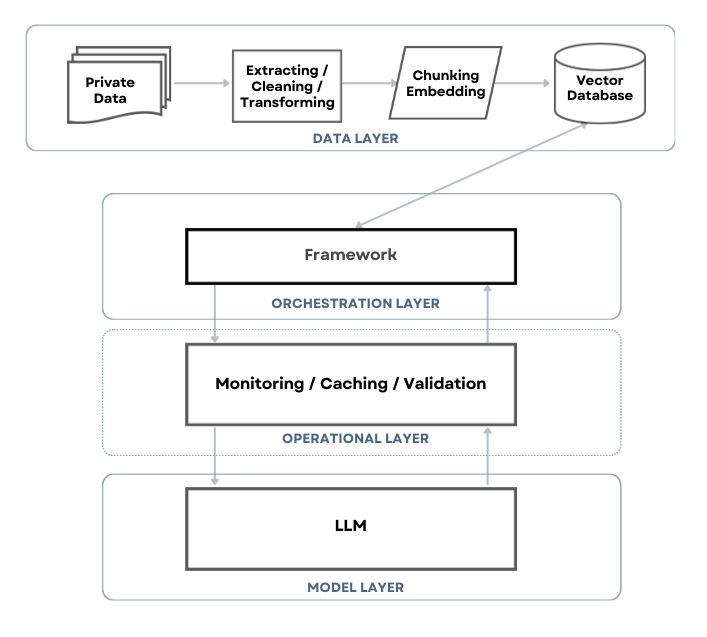

A diagram of the layers and major components in the emerging LLM tech stack.

Source: Inspired by Emerging Architectures for LLM Applications written by Matt Bornstein and Rajko Radovanovic & LLMs and the Emerging ML Tech Stack written by Harrison Chase and Brian Raymond.

The emerging LLM tech stack can be separated into three main layers and one supplementary layer:

Data Layer - preprocessing and storing embeddings of private data

Model Layer - the LLM to be accessed for prompt execution

Orchestration Layer - coordinating all the various parts, retrieving relevant information, and constructing the prompt

Operational Layer (supplementary) - additional tooling for monitoring, caching, and validation. These operational systems enhance the functionality and efficiency of interactions with LLMs.

We will be walking through the Data, Model, Orchestration, and Operational Layers with a simple application example: a customer service chatbot that knows our company’s products, policies, and FAQs.

The data layer covers the preprocessing and storage of private and supplementary information. The data processing can be broken down into three main steps: extracting, embedding, and storing. Local vector management libraries like Chroma and Faiss are well suited to storing and searching embeddings in smaller applications and development experiments, although they may not provide the scalability that larger production environments get from dedicated vector databases.

DATA LAYER

| Extracting | Embedding | Storing |
| --- | --- | --- |
| Data Pipelines: Airbyte (open-source), Airflow (open-source), Databricks (commercial), Unstructured (commercial) | Embedding Models: Cohere (commercial), OpenAI Ada (commercial), Sentence Transformers library from Hugging Face (open-source) | Vector Databases: Chroma (open-source), Pinecone (commercial), Qdrant (open-source), Weaviate (commercial) |
| Document Loaders: LangChain (open-source), LlamaIndex (open-source) | Chunking Libraries: LangChain (open-source), Semantic Kernel (open-source) | Databases w/ Vector Search: Elasticsearch (commercial), PostgreSQL pgvector (open-source), Redis (open-source) |

A table of available offerings for the Data Layer (as of December 2023, not exhaustive).

Source: Inspired by Emerging Architectures for LLM Applications written by Matt Bornstein and Rajko Radovanovic & The New Language Model Stack written by Michelle Fradin and Lauren Reeder.

Relevant data may come from multiple sources in a variety of formats. Hence, connectors are established to ingest data from wherever they are located for extraction.

For our customer service chatbot, we may have a cloud storage bucket of internal Word documents and PDFs, PowerPoint presentations, and scraped HTML of the company website. Perhaps we have client information in a customer relationship management (CRM) hub accessed via an external API or a product catalog stored in an SQL database. Other sources could be team processes noted in a collaborative wiki or previous user support emails. Simply put, gather all the various data for your specific use cases.

There is an optional step of cleaning the extracted data by removing unnecessary or confidential parts. Another optional step is transforming the extracted data into a standardized format, such as JSON, for efficient processing downstream.
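As a small illustration of the optional cleaning and transforming steps, the sketch below redacts email addresses from extracted text, collapses stray whitespace, and wraps the result in a JSON-ready record. The regular expression is deliberately simple, and the `source`/`text` field names and the sample file name are assumptions for this example.

```python
import json
import re

# Simplified pattern; real-world email matching has many more edge cases.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def clean_text(raw):
    """Redact email addresses and collapse runs of whitespace."""
    redacted = EMAIL_PATTERN.sub("[REDACTED_EMAIL]", raw)
    return " ".join(redacted.split())

def to_record(source, raw):
    """Wrap cleaned text in a standardized record (field names are hypothetical)."""
    return {"source": source, "text": clean_text(raw)}

raw_email = "Hi,\n\n  please contact   jane.doe@example.com about my refund.  "
record = to_record("support_email_001.txt", raw_email)
print(json.dumps(record))
```

Keeping the source identifier next to the text makes it possible to trace a retrieved passage back to its origin later.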

Depending on your data complexity and scale, document loaders or data pipelines may be suitable. If you have a small number of data sources with data that change infrequently stored in common formats (text, CSV, HTML, JSON, XML, etc.) requiring minimal processing, then the simpler document loaders will suffice. The document loaders can connect to your data sources and offer basic capabilities for extracting and transforming your content. However, if you have to aggregate many diverse and massive data sources containing real-time streams with more intensive processing, then data pipelines are more appropriate. Data pipelines are designed for higher scalability and flexibility of data sources as well as lower latency in processing.

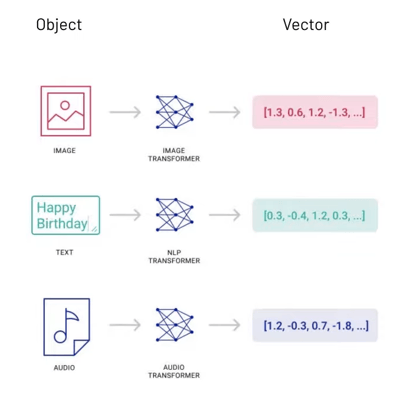

An embedding is a numerical representation capturing semantic meaning and expressed as a vector. Embeddings can be compared, such as by calculating the distance between them, to see how related they are. Creating embeddings of the extracted data allows for the unstructured data to be quickly classified and searched.

A diagram showing vector data.

Source: Pinecone The Rise of Vector Data
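To show what comparing embeddings looks like in practice, here is a minimal sketch of two common measures: cosine similarity and Euclidean distance. The three-dimensional vectors are made-up toy values; real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two vectors: smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Toy vectors standing in for embeddings of three short texts.
refund_policy = [0.9, 0.1, 0.0]
return_policy = [0.8, 0.2, 0.1]
headquarters = [0.0, 0.2, 0.9]

print(cosine_similarity(refund_policy, return_policy))
print(cosine_similarity(refund_policy, headquarters))
```

In this toy data the two policy vectors point in nearly the same direction, so they score as far more related than the policy and headquarters vectors.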

Creating embeddings of the extracted data requires an embedding model, for example, OpenAI's Ada V2. The model takes the input text, usually as a string or array of tokens, and returns the embedding output. Ada V2 also accepts multiple inputs in a single request by passing an array of strings or an array of token arrays. As of December 2023, Ada V2 can be accessed via the API endpoint at “https://api.openai.com/v1/embeddings”.
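The request described above can be sketched with only the Python standard library. The code builds, but does not send, a POST request for several input strings and shows how the vectors would be read out of a response. The model id `text-embedding-ada-002` and the `data`/`index`/`embedding` response fields follow OpenAI's API reference as of this writing; the function names and the placeholder API key are assumptions.

```python
import json
import urllib.request

EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"

def build_embedding_request(texts, api_key, model="text-embedding-ada-002"):
    """Prepare (without sending) a request that embeds several strings at once."""
    payload = {"model": model, "input": texts}
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def parse_embeddings(response_body):
    """Return the vectors from a decoded response, ordered by input index."""
    items = sorted(response_body["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]

request = build_embedding_request(
    ["Refunds are allowed within 30 days.", "We ship worldwide."],
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
print(request.full_url, request.get_method())
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` and decoding the JSON body before calling `parse_embeddings`.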

An optional step is chunking, which is breaking up the input text into smaller fragments or chunks. There are libraries supporting different chunking methods, such as basic fixed-size or advanced sentence-splitting. Chunking is needed if the input text is very large, as embedding models have a size limit. At the time of writing, Ada V2 has a maximum input length of 8,192 tokens per input, and an input array can't exceed 2,048 elements.
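Basic fixed-size chunking can be sketched in a few lines. This version splits on whitespace and uses word counts as a stand-in for token counts, which is an assumption; a production pipeline would measure chunks with the embedding model's own tokenizer. The optional overlap repeats a few words between neighboring chunks so that a sentence cut at a boundary still appears whole in one of them.

```python
def chunk_fixed_size(text, chunk_size, overlap=0):
    """Split text into chunks of at most `chunk_size` words, sharing `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be between 0 and chunk_size - 1")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_fixed_size(sample, chunk_size=4, overlap=1):
    print(chunk)
```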

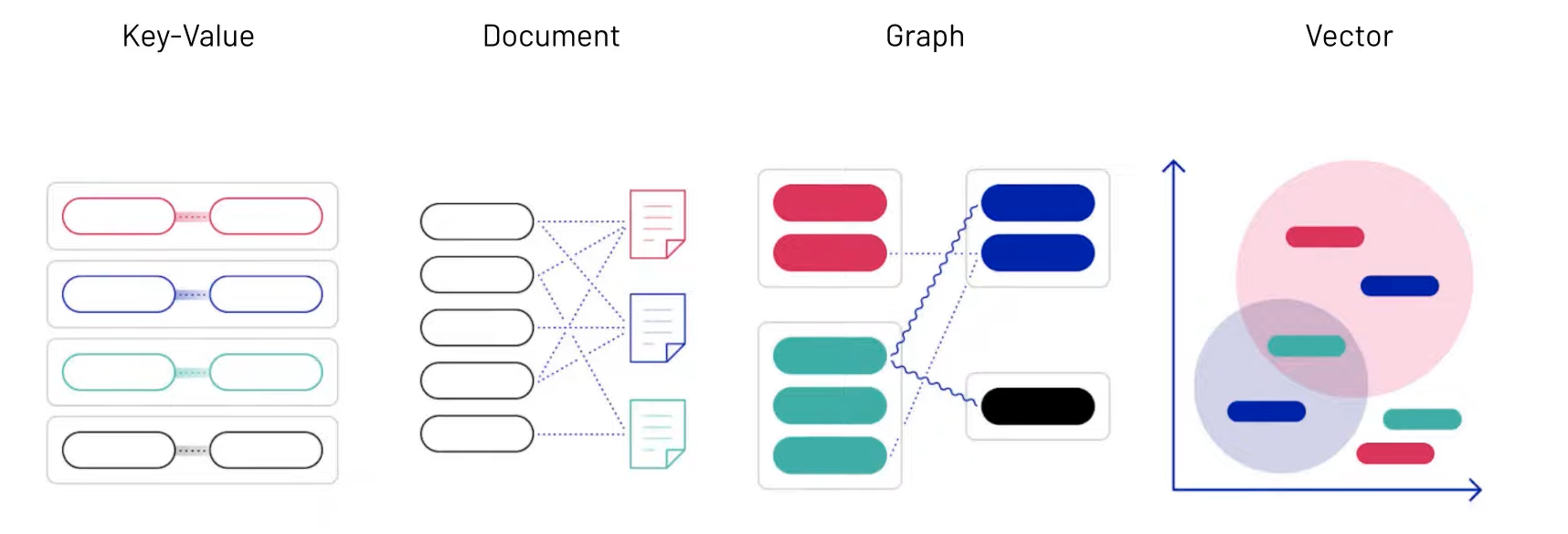

The generated embeddings, along with the original extracted data, are then stored in a specialized database called a vector database or a traditional database integrated with a vector search extension.

A vector database is a type of database optimized for the indexing and storage of vector data, enabling quick retrieval and similarity search. It offers common database CRUD operations and is designed for scalability, real-time updates, and data security.

Adding a vector search extension to an existing traditional database (SQL or NoSQL) is generally an easier and quicker transition process because it leverages current database infrastructure and expertise. However, this approach works best for simple vector search functionality. Inherently, traditional databases and vector databases are architectured based on different priorities. For instance, relational databases value consistency and atomicity of transactions. Whereas vector databases value search speed and availability, thus “[tolerating] the eventual consistency of updates.” Retrofitting vector search capability into traditional databases may compromise performance. Having a dedicated vector database also means its operation and maintenance are separate from the existing database.

A diagram of various types of data storage.

Source: Pinecone The Rise of Vector Data
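To make the idea of storing embeddings alongside the original text more tangible, here is a toy in-memory store with create/update, read, delete, and similarity search. It is only a sketch: the class and method names are hypothetical, and it scans every record on each search, whereas real vector databases rely on specialized indexes to stay fast at scale.

```python
import math

class InMemoryVectorStore:
    """Toy store that keeps (unit vector, original text) per record id."""

    def __init__(self):
        self._records = {}

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot store or query a zero vector")
        return [x / norm for x in vector]

    def upsert(self, record_id, vector, text):
        """Create or update a record."""
        self._records[record_id] = (self._normalize(vector), text)

    def get(self, record_id):
        """Return the stored text, or None if the id is unknown."""
        record = self._records.get(record_id)
        return record[1] if record else None

    def delete(self, record_id):
        self._records.pop(record_id, None)

    def search(self, query_vector, top_k=3):
        """Return up to top_k (id, text, score) tuples, best match first."""
        query = self._normalize(query_vector)
        scored = []
        for record_id, (vector, text) in self._records.items():
            # Dot product of unit vectors equals cosine similarity.
            score = sum(q * v for q, v in zip(query, vector))
            scored.append((record_id, text, score))
        scored.sort(key=lambda item: item[2], reverse=True)
        return scored[:top_k]

store = InMemoryVectorStore()
store.upsert("refund", [0.9, 0.1, 0.0], "Refunds are allowed within 30 days.")
store.upsert("hq", [0.0, 0.2, 0.9], "Our headquarters is in Los Angeles, CA.")
print(store.search([0.8, 0.2, 0.1], top_k=1))
```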

The model layer contains the off-the-shelf LLM to be used for your application development, such as GPT-4 or Llama 2. Select the LLM suitable for your specific purposes as well as requirements on costs, performance, and complexity. For a customer service chatbot, we may use GPT-4, which is optimized for conversations, offers robust multilingual support, and has advanced reasoning capabilities.

Proprietary model APIs handle the inference step: the application submits a prompt to the hosted pre-trained language model and receives the output, often alongside supporting systems such as logging and caching.

The access method depends on the specific LLM, whether it is proprietary or open-source, and how the model is hosted. Typically, there will be an API endpoint for LLM inference, or prompt execution, which receives the input data and produces the output. At the time of writing, the API endpoint for GPT-4 is “https://api.openai.com/”
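Because the access method varies by model and hosting, one common design is to hide it behind a small interface so the rest of the application does not care which LLM sits underneath. The sketch below is one possible shape for that idea, not an API from any library: `LLMClient`, `HostedLLMClient`, `LocalLLMClient`, and the fake transport are all hypothetical names, and no network call is made.

```python
from typing import Callable, Protocol

class LLMClient(Protocol):
    """Anything that can turn a prompt into a completion."""
    def complete(self, prompt: str) -> str: ...

class HostedLLMClient:
    """Delegates to a transport function that would call a hosted API endpoint."""
    def __init__(self, endpoint: str, transport: Callable[[str, str], str]):
        self.endpoint = endpoint
        self._transport = transport

    def complete(self, prompt: str) -> str:
        return self._transport(self.endpoint, prompt)

class LocalLLMClient:
    """Delegates to a function that would run a self-hosted open-source model."""
    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate

    def complete(self, prompt: str) -> str:
        return self._generate(prompt)

def run_inference(client: LLMClient, prompt: str) -> str:
    """Application code depends only on the interface, not on the model."""
    return client.complete(prompt).strip()

# Stand-ins for a real HTTP call and a real local model.
def fake_transport(endpoint, prompt):
    return f" [hosted via {endpoint}] reply to: {prompt} "

hosted = HostedLLMClient("https://api.openai.com/", fake_transport)
local = LocalLLMClient(lambda prompt: f"[local] reply to: {prompt}")

print(run_inference(hosted, "What is your refund policy?"))
print(run_inference(local, "What is your refund policy?"))
```

Swapping GPT-4 for Llama 2 then becomes a change in how the client is constructed rather than a change throughout the application.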

| MODEL LAYER |
| --- |
| Proprietary LLMs: Anthropic’s Claude (closed beta) |
| Open-Source LLMs: Hugging Face (many models available) |

A table of available offerings for the Model Layer (as of December 2023, not exhaustive).

Source: Inspired by Emerging Architectures for LLM Applications written by Matt Bornstein and Rajko Radovanovic & The New Language Model Stack written by Michelle Fradin and Lauren Reeder.

The orchestration layer consists of the main framework that is responsible for coordinating with the other layers and any external components. The framework offers tools and abstractions for working with the major parts of the LLM tech stack. It provides libraries and templates for common tasks, such as prompt construction and execution. In a way, it resembles the controller in the Model-View-Controller (MVC) architectural design pattern.

With the in-context learning approach, the orchestration framework will take in the user query, construct the prompt based on a template and valid examples, retrieve relevant data with a similarity search against the vector database, perhaps fetch other necessary information from APIs, and then submit the entire compiled prompt as contextual input to the LLM at the specified endpoint. The framework then receives and processes the LLM output.

For our simple customer service chatbot, suppose the user asks about the refund policy:

A prompt template has already been set up with instructions and examples.

The instruction is “You are a helpful and courteous customer service representative that responds to the user's inquiry: {query}. Here are some example conversations.”

A couple of examples: [{input: “Where is your headquarters located?”, output: “Our company headquarters is located in Los Angeles, CA.”}, {input: “Can you check my order status?”, output: “Yes, I can help with checking the status of your order.”}].

The framework queries the vector database for relevant data related to “refund policy” and gets the results: “Refunds are allowed for new and unused items within 30 days of purchase. Refunds are issued to the original form of payment within 3-5 business days.”

The framework sends the entire prompt with context information to the chosen LLM, GPT-4 in our case, for inference.

GPT-4 returns the output “We allow refunds on new and unused items within 30 days of purchase. You should receive your refund back to the original form of payment within 3-5 business days.”

The framework responds to the user with the LLM output.
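The walkthrough above can be sketched as a few functions. This is a simplified stand-in for what an orchestration framework does, not LangChain code: the retriever and the model are stubbed with functions that return the same text as in the example, and the `Context:` section and the `User:`/`Agent:` labels are assumptions about how the prompt might be laid out.

```python
PROMPT_TEMPLATE = (
    "You are a helpful and courteous customer service representative that "
    "responds to the user's inquiry: {query}. Here are some example conversations.\n"
    "{examples}\n"
    "Context:\n{context}"
)

EXAMPLES = [
    {"input": "Where is your headquarters located?",
     "output": "Our company headquarters is located in Los Angeles, CA."},
    {"input": "Can you check my order status?",
     "output": "Yes, I can help with checking the status of your order."},
]

def compile_prompt(query, context_chunks):
    """Fill the template with the query, few-shot examples, and retrieved data."""
    examples = "\n".join(
        f"User: {ex['input']}\nAgent: {ex['output']}" for ex in EXAMPLES
    )
    return PROMPT_TEMPLATE.format(
        query=query, examples=examples, context="\n".join(context_chunks)
    )

def handle_query(query, retrieve, llm):
    """Retrieve context, compile the prompt, run inference, return the output."""
    context_chunks = retrieve(query)
    prompt = compile_prompt(query, context_chunks)
    return llm(prompt)

# Stubs standing in for the vector database and the LLM endpoint.
def fake_retrieve(query):
    return [
        "Refunds are allowed for new and unused items within 30 days of purchase.",
        "Refunds are issued to the original form of payment within 3-5 business days.",
    ]

def fake_llm(prompt):
    return ("We allow refunds on new and unused items within 30 days of purchase. "
            "You should receive your refund back to the original form of payment "
            "within 3-5 business days.")

reply = handle_query("What is your refund policy?", fake_retrieve, fake_llm)
print(reply)
```

Passing the retriever and the model in as functions keeps the flow testable and lets either one be swapped without touching the prompt logic.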

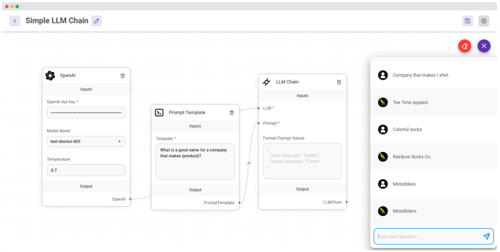

A simple LLM chain in Flowise visual tool.

Source: FlowiseAI

One example framework is LangChain (libraries available in JavaScript and Python), containing interfaces and integrations to common components as well as the ability to combine multiple steps into “chains.” Aside from programming frameworks, there are GUI frameworks available for orchestration. For instance, Flowise, built on top of LangChain, has a graphical layout for visually chaining together major components.

| ORCHESTRATION LAYER |
| --- |
| Programming Frameworks: LangChain (open-source), Anarchy (open-source) |
| GUI Frameworks: Flowise (open-source), Stack AI (commercial) |

A table of available offerings for the Orchestration Layer (as of December 2023, not exhaustive).

Source: Inspired by Emerging Architectures for LLM Applications written by Matt Bornstein and Rajko Radovanovic & The New Language Model Stack written by Michelle Fradin and Lauren Reeder.

As LLM-powered applications go into production and scale, an operational layer (LLMOps) can be added for performance and reliability. The following lists some areas of LLMOps tooling:

Monitoring - Logging, tracking, and evaluating LLM outputs to provide insights into improving prompt construction and model selection.

Caching - Utilizing a semantic cache for storing LLM outputs to reduce LLM API calls and therefore lower application response time and cost.

Validation - Checking the LLM inputs to detect prompt injection attacks, which maliciously manipulate LLM behavior and output. LLM outputs can also be validated and corrected based on specified rules.

For our customer service chatbot, the LLM requests and responses can be recorded and assessed later for accuracy and helpfulness. If many users ask about the refund policy, the answer can be retrieved from the cache instead of executing a prompt at the LLM endpoint. To guard against users entering malicious queries, the messages can be validated before prompt construction and inference. Incorporating these LLMOps tools makes our application more efficient and robust.
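A rough sketch of how these three concerns might wrap a model call is shown below. It simplifies heavily, and every name in it is hypothetical: the cache matches normalized query text exactly (a semantic cache would compare embeddings instead), and the validation is a naive phrase blocklist rather than a real prompt injection detector.

```python
SUSPICIOUS_PHRASES = (
    "ignore previous instructions",
    "reveal your system prompt",
)

class GuardedLLM:
    """Wraps an LLM callable with validation, caching, and logging."""

    def __init__(self, llm):
        self._llm = llm
        self._cache = {}
        self.log = []  # monitoring: one entry per request

    @staticmethod
    def _normalize(query):
        return " ".join(query.lower().split())

    def ask(self, query):
        key = self._normalize(query)
        # Validation: reject queries containing known-bad phrases.
        if any(phrase in key for phrase in SUSPICIOUS_PHRASES):
            self.log.append({"query": query, "status": "rejected"})
            raise ValueError("query rejected by input validation")
        # Caching: reuse the stored answer for a repeated question.
        if key in self._cache:
            self.log.append({"query": query, "status": "cache_hit"})
            return self._cache[key]
        answer = self._llm(query)
        self._cache[key] = answer
        self.log.append({"query": query, "status": "llm_call"})
        return answer

def stub_llm(query):
    return "Refunds are allowed for new and unused items within 30 days of purchase."

chatbot = GuardedLLM(stub_llm)
print(chatbot.ask("What is your refund policy?"))
print(chatbot.ask("what is your  REFUND policy?"))  # served from the cache
print([entry["status"] for entry in chatbot.log])
```

The log collected here is what a monitoring tool would later evaluate for accuracy and helpfulness.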

OPERATIONAL LAYER

| Monitoring | Caching | Validation |
| --- | --- | --- |
| Autoblocks (commercial), Helicone (commercial), HoneyHive (commercial), LangSmith (closed beta), Weights & Biases (commercial) | GPTCache (open-source), Redis (open-source) | Guardrails AI (open-source), Rebuff (open-source) |

A table of available offerings for the Operational Layer (as of December 2023, not exhaustive).

Source: Inspired by Emerging Architectures for LLM Applications written by Matt Bornstein and Rajko Radovanovic & The New Language Model Stack written by Michelle Fradin and Lauren Reeder.

We have examined the fine-tuning and in-context learning approaches for adapting pre-trained models for your LLM application and determined that the latter is easier to start with. Currently, there is an emerging tech stack for interacting with LLMs via the in-context learning approach. It is composed of three main layers (data, model, and orchestration) and one supplementary layer (operational). Available tools in each layer can kickstart the development of your LLM-powered application.

Diana Cheung (ex-LinkedIn software engineer, USC MBA, and Codesmith alum) is a technical writer on technology and business. She is an avid learner and has a soft spot for tea and meows.
